Sesame AI is behind one of the best conversational speech models we have seen. The company has now released CSM 1B (Conversational Speech Model), which was trained on 1 million hours of data. The model is contextually aware, which lets it produce intelligent speech. As the company explains:

> The model architecture employs a Llama backbone and a smaller audio decoder that produces Mimi audio codes.
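To make the two-stage design more concrete, here is a toy sketch in Python. It follows Sesame's own description of CSM, in which the backbone predicts the first (zeroth) Mimi codebook for each audio frame and the decoder fills in the remaining codebooks. Treat that split as an assumption for this illustration. The functions `toy_backbone`, `toy_decoder`, and `generate_frames`, along with the constants `NUM_CODEBOOKS` and `CODEBOOK_SIZE`, are hypothetical. They use made-up arithmetic in place of neural networks, and none of this is the actual CSM code or API.

```python
from typing import List

# Illustrative assumptions, not values taken from the CSM release.
NUM_CODEBOOKS = 32    # hypothetical number of Mimi codebooks per audio frame
CODEBOOK_SIZE = 2048  # hypothetical number of entries in each codebook


def toy_backbone(text_tokens: List[int], history: List[List[int]]) -> int:
    """Hypothetical stand-in for the Llama backbone.

    Predicts codebook 0 of the next frame from the text tokens and every
    previously generated frame (deterministic arithmetic, not a model).
    """
    state = sum(text_tokens) + sum(frame[0] for frame in history) + len(history)
    return state % CODEBOOK_SIZE


def toy_decoder(code0: int) -> List[int]:
    """Hypothetical stand-in for the smaller audio decoder.

    Fills in codebooks 1..N-1 for the current frame. Here it conditions only
    on codebook 0; a real decoder would also see the backbone's hidden state.
    """
    codes = [code0]
    for k in range(1, NUM_CODEBOOKS):
        codes.append((codes[-1] * 31 + k) % CODEBOOK_SIZE)
    return codes


def generate_frames(text_tokens: List[int], num_frames: int) -> List[List[int]]:
    """Autoregressive loop: one backbone step, then one decoder pass, per frame."""
    history: List[List[int]] = []
    for _ in range(num_frames):
        code0 = toy_backbone(text_tokens, history)
        history.append(toy_decoder(code0))
    return history


if __name__ == "__main__":
    frames = generate_frames([1, 2, 3], num_frames=5)
    print(len(frames), "frames of", len(frames[0]), "codes each")
```

In this sketch the backbone runs once per frame, while the smaller decoder handles the per-codebook detail. That separation is roughly what the phrase "a smaller audio decoder" suggests: the larger model stays out of the innermost loop.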

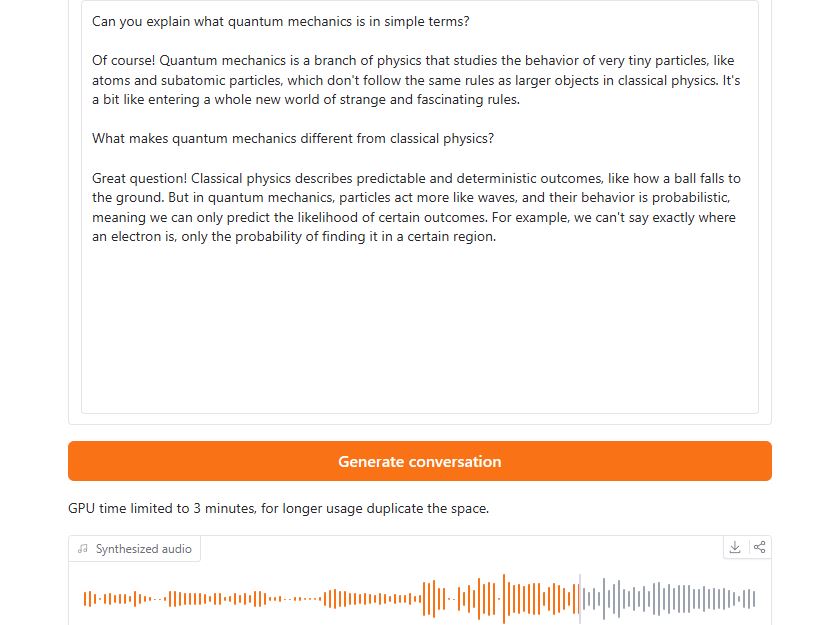

To run it, you will need a CUDA-compatible GPU and Python 3.10. Some operations also require ffmpeg. Here is an example of what can be done with this:

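Before trying the model, you can check the requirements listed above with a short script. This is a minimal sketch. It assumes that PyTorch is how you will detect CUDA, and it treats a missing PyTorch install as "no CUDA". The function name `check_prerequisites` and its dictionary keys are hypothetical and not part of the CSM repository.

```python
import shutil
import sys


def check_prerequisites() -> dict:
    """Hypothetical helper: report whether the stated requirements are met."""
    report = {}
    # The requirement mentioned in the text is Python 3.10 specifically.
    report["python_3_10"] = sys.version_info[:2] == (3, 10)
    # ffmpeg is needed for some operations; look for it on PATH.
    report["ffmpeg_on_path"] = shutil.which("ffmpeg") is not None
    # Assumption: use PyTorch to detect a CUDA-compatible GPU.
    try:
        import torch
        report["cuda_available"] = bool(torch.cuda.is_available())
    except ImportError:
        report["cuda_available"] = False
    return report


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A "missing" result for `python_3_10` does not necessarily mean the model will fail to run on another Python version. It only means the setup differs from the one described here.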