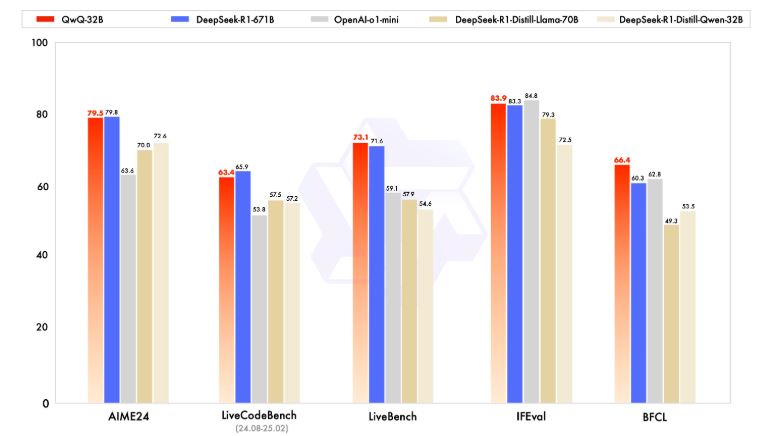

DeepSeek excited many AI fans with its R1 model a while ago, and since then we have seen plenty of other powerful models. Qwen's QwQ-32B is a new model with 32 billion parameters that achieves performance comparable to DeepSeek R1, which has 671 billion parameters. Qwen attributes this result to reinforcement learning. QwQ-32B is released as an open-weight model on Hugging Face and ModelScope.

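The model is already available for testing online in Qwen Chat. It was initially accessible through the Qwen2.5-Plus + Thinking option, and the Qwen team has since added QwQ-32B to the Qwen Chat model list so it can be selected directly.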

🥝 Yesterday we opensourced QwQ-32B, and we put the model on Qwen2.5-Plus + Thinking in Qwen Chat. Based on your feedback, we make a change and put QwQ-32B on the model list of Qwen Chat, and thus you can directly access it by choosing this model. Enjoy and feel free to give us… https://t.co/WrJX9PR8yE pic.twitter.com/RdiKuJInwy

— Qwen (@Alibaba_Qwen) March 6, 2025

[HT]