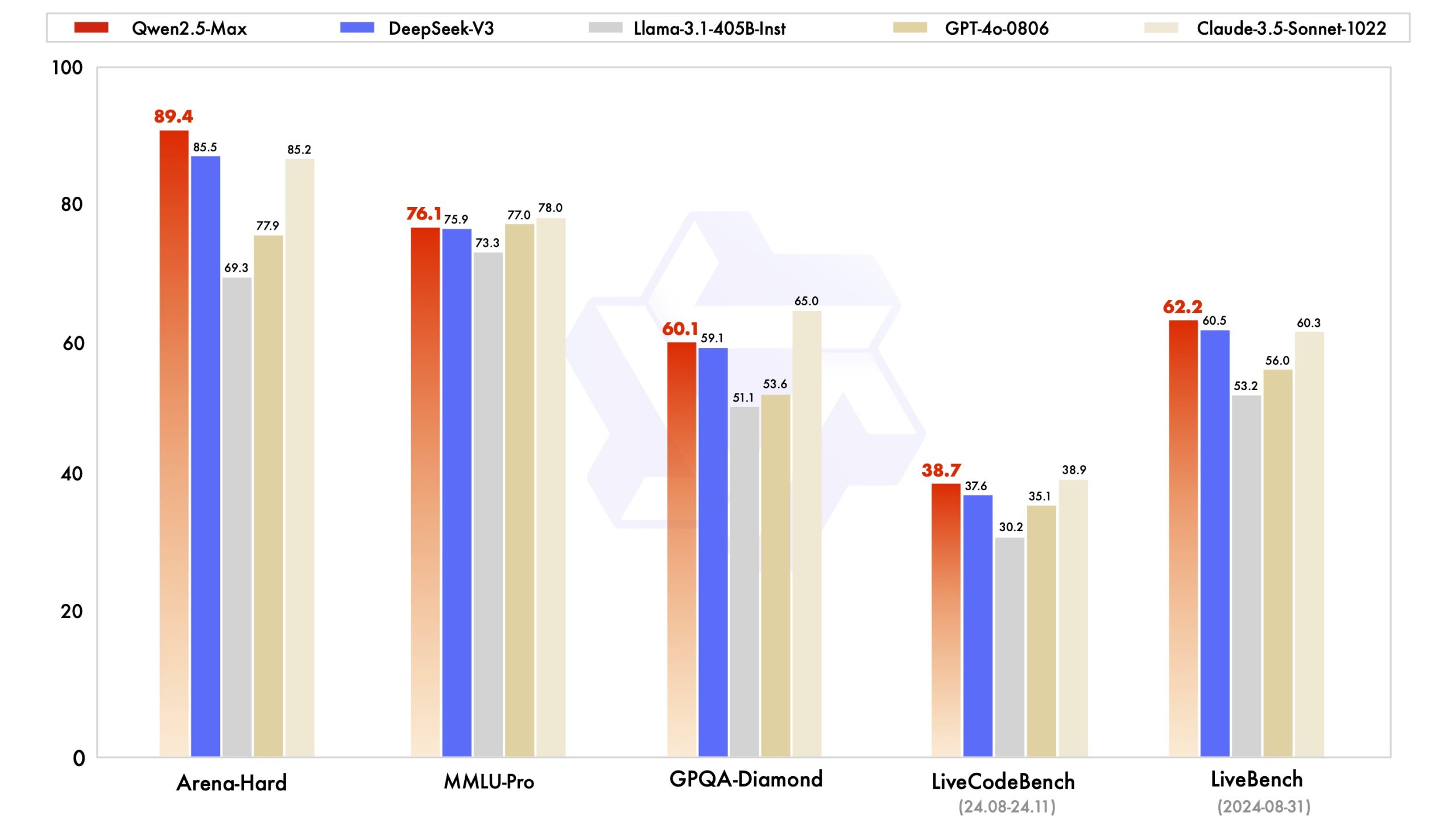

DeepSeek has gotten a lot of media coverage lately, but it’s not the only new AI model worth testing. Qwen2.5-Max is a large Mixture-of-Experts (MoE) LLM pretrained on massive data and post-trained with supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). According to Alibaba, it can compete with some of the best models around, such as GPT-4o and Claude 3.5 Sonnet.

The burst of DeepSeek V3 has attracted attention from the whole AI community to large-scale MoE models. Concurrently, we have been building Qwen2.5-Max, a large MoE LLM pretrained on massive data and post-trained with curated SFT and RLHF recipes. It achieves competitive… pic.twitter.com/oHVl16vfje

— Qwen (@Alibaba_Qwen) January 28, 2025

A chat interface is already available, where you can interact with the model or generate videos. You can also find it on Hugging Face.