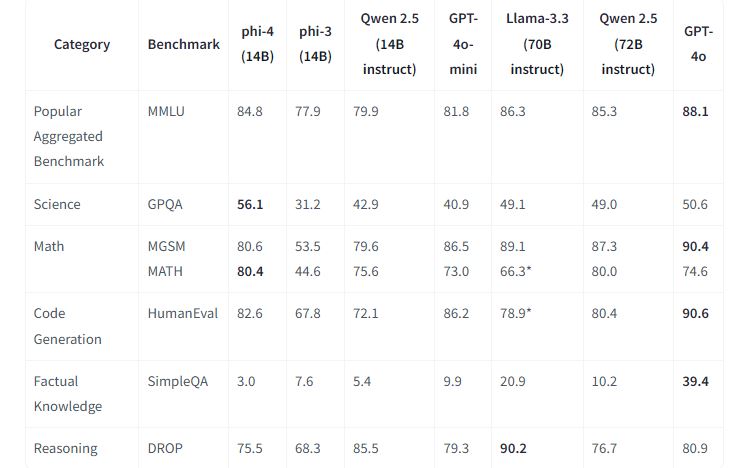

We covered Microsoft’s Phi 4 model a few weeks ago. It is a 14B-parameter small language model (SLM) that outperforms larger models on math problems, and it is now available on Hugging Face. It has a context length of 16k tokens and was trained on data focused on advanced reasoning. As Microsoft explains, the training data includes publicly available documents, textbook-like data, academic books and Q&A datasets, and “chat format supervised data covering various topics to reflect human preferences on different aspects such as instruction-following, truthfulness, honesty and helpfulness.”

The above table shows where Phi 4 stands.
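
Since the model is on Hugging Face, here is a minimal sketch of how you might try it with the `transformers` library. It rests on a few assumptions that are not from Microsoft's announcement: that the repository ID is `microsoft/phi-4`, that "16k" means 16 × 1024 tokens, and that you have `transformers`, `torch` and `accelerate` installed along with enough GPU memory for a 14B model. The helper names (`build_messages`, `fits_context`, `ask`) and the system prompt are made up for illustration.

```python
# Assumption: "microsoft/phi-4" is the Hugging Face repository ID for the model.
MODEL_ID = "microsoft/phi-4"
# Assumption: the "16k" context length is read as 16 * 1024 tokens.
CONTEXT_LENGTH = 16 * 1024


def build_messages(question: str) -> list[dict[str, str]]:
    """Wrap a question in the chat-message format accepted by transformers pipelines."""
    return [
        # Hypothetical system prompt, chosen only for this example.
        {"role": "system", "content": "You are a careful math tutor."},
        {"role": "user", "content": question},
    ]


def fits_context(prompt_tokens: int, max_new_tokens: int) -> bool:
    """Check that the prompt plus the generated tokens stay within the context window."""
    return prompt_tokens + max_new_tokens <= CONTEXT_LENGTH


def ask(question: str, max_new_tokens: int = 256) -> str:
    """Load the model (downloading it if it is not cached) and return its reply."""
    # Imported here so the helpers above work without the heavy dependency.
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=MODEL_ID,
        torch_dtype="auto",   # let transformers pick the checkpoint's dtype
        device_map="auto",    # requires the accelerate package
    )
    outputs = generator(build_messages(question), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last entry is the assistant's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `ask("What is the sum of the first 100 positive integers?")` would download the weights on first use, so expect a long wait and a large disk footprint. The `fits_context` check is only a rough guard; for real prompts you would count tokens with the model's own tokenizer.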

[HT]