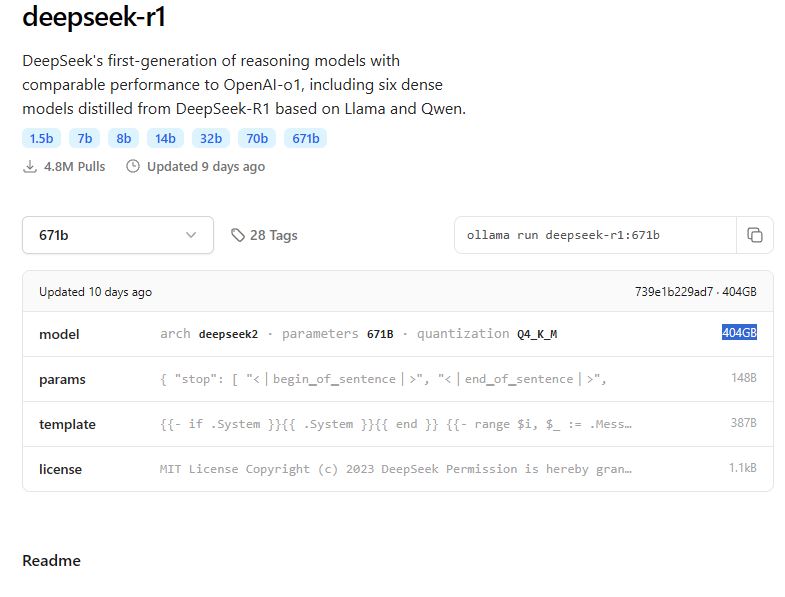

DeepSeek R1 has generated a lot of excitement in the AI industry. While OpenAI is responding with o3 and o3-mini later today, plenty of companies, including Perplexity and Windsurf, are racing to add support for DeepSeek R1. Thanks to NVIDIA, you can now try the 671-billion-parameter DeepSeek-R1 model to build your own agents. Keep in mind that this model takes up over 400GB if you try to run it locally.

This model is now available as an NVIDIA NIM microservice in preview. It can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system. As the company explains:

> Delivering real-time answers for R1 requires many GPUs with high compute performance, connected with high-bandwidth and low-latency communication to route prompt tokens to all the experts for inference. Combined with the software optimizations available in the NVIDIA NIM microservice, a single server with eight H200 GPUs connected using NVLink and NVLink Switch can run the full, 671-billion-parameter DeepSeek-R1 model at up to 3,872 tokens per second.
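
To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above, and it rests on a few assumptions: that the 3,872 tokens per second is an aggregate peak for the whole system, that the load splits evenly across the eight GPUs, and that "over 400GB" means decimal gigabytes. The function names are made up for illustration.

```python
# Figures quoted in the article.
PARAMS = 671e9             # model parameters
TOKENS_PER_SECOND = 3872   # peak throughput of one HGX H200 system
GPUS = 8                   # H200 GPUs in that system
LOCAL_SIZE_BYTES = 400e9   # "over 400GB", read as decimal gigabytes (assumption)


def per_gpu_throughput(total_tps: float, gpus: int) -> float:
    """Average tokens per second per GPU, assuming an even split (assumption)."""
    return total_tps / gpus


def implied_bits_per_param(size_bytes: float, params: float) -> float:
    """Average storage per parameter, in bits, implied by a given file size."""
    return size_bytes * 8 / params


if __name__ == "__main__":
    tps = per_gpu_throughput(TOKENS_PER_SECOND, GPUS)
    bits = implied_bits_per_param(LOCAL_SIZE_BYTES, PARAMS)
    print(f"Per-GPU share of peak throughput: {tps:.0f} tokens/s")
    print(f"Implied storage per parameter at 400GB: {bits:.2f} bits")
```

Under these assumptions, each GPU handles an average of 484 tokens per second at peak, and a 400GB copy of the model works out to a little under 5 bits per parameter, which suggests the local download is a compressed (quantized) build rather than full-precision weights.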
